Why has Chapman introduced PantherAI?

Graphic by Easton Clark, Photography Editor

Universities are in a sticky situation regarding artificial intelligence (AI). Fears about its impact on academic integrity have reached a boiling point, but institutions can’t afford to fall behind the curve. Chapman’s solution is called PantherAI.

The system, which was made available to all students and faculty at the start of this semester, is built on the open-source platform LibreChat. That means students can access multiple models (GPT-4o, Claude and Gemini) on one centralized website.

It also means that Chapman saves money. The California State University system recently paid $17 million to give ChatGPT to all faculty and students, a price that wouldn’t have been viable for Chapman. Under its arrangement, however, the university will pay only a small amount based on how much the product is used, according to Interim Vice President and Chief Information Officer Phillip Lyle.

“If the campus doesn’t really need it, we’re not really paying anything for it,” Lyle said. “I’m unable to share an exact (cost), but our budget is a tiny, tiny fraction of what it would cost for an equivalent site license with ChatGPT.”

It was Lyle’s direction that led to PantherAI’s creation. He has been involved in AI policy and discussions on campus for years, and helped create the initial guidelines about generative systems when ChatGPT came out.

Lyle said that the decision to move forward with PantherAI was all about flexibility, security and cost efficiency. There is no contract for the LibreChat system, meaning the school can move on if a better alternative emerges. Also, creating an account on sites like ChatGPT could expose users to data privacy risks.

“If you’re not careful in reviewing those agreements… it is possible that the data you are putting in will be used to train (the AI model),” Lyle said.

According to Lyle, nothing that students or faculty put into PantherAI will be used to train any sort of language model.

Another big plus that Lyle said he sees in LibreChat is the fact that Harvard University is a partner. That only strengthened his belief that it was worth using at Chapman.

For some students, however, there is a lack of trust in PantherAI.

Edsel Louis Tinoco, a freshman majoring in creative writing, said that he has had a ChatGPT account since it was still in beta testing. In his view, universities benefit from leaning into AI rather than outlawing it completely and depriving students of its helpful capabilities. But he still has no intention of using PantherAI.

“I would be worried that Chapman would use my data to accuse me of cheating, because nothing is stopping them from doing so,” Tinoco said.

And perhaps he has a point. The terms of service for PantherAI contain a few sentences that could arouse suspicion in a student wanting to use the service.

It reads: “Usage, including prompt information and responses, are recorded and stored within PantherAI. As is the case with all Chapman-owned systems — it is possible that authorized Chapman Information Systems and Technology (IS&T) staff members may see prompt or response details as part of a troubleshooting or debugging process.”

The fact that some Chapman staff members can review that information, even if only for troubleshooting, could deter students from using PantherAI.

The website’s frequently asked questions section says PantherAI is also subject to the Computer and Network Acceptable Use policy.

That policy states: “Although Chapman University does not make a practice of monitoring e-mail The University reserves the right to retrieve the contents of University-owned computers or e-mail messages for legitimate reasons, such as to find lost messages, to comply with investigations of wrongful acts, to respond to subpoenas, or to recover from system failure.”

Lyle said that Chapman is going to be fluid with its terms around the system, and may remove some ambiguity in the language, but that nobody using the model should have concerns about who can see their prompts. IS&T only plans to review data if there are errors in the system, and will only turn it over if it is legally required to do so.

Academic integrity is a high priority for the Chapman administration. In Dean of Students Jerry Price’s weekly email on Sept. 8, the first item had to do with AI usage and cheating, as the university has seen a big uptick in cases where students use generative programs in unethical ways.

With a broader crackdown on AI-related academic dishonesty underway, Tinoco said he doesn’t trust that PantherAI won’t be used to catch students cheating.

“I do not believe Chapman’s promise that it won’t use students’ data,” he said. “This is because it’s safer to assume they will instead of believing their promise, then finding out that all collected data has, in fact, been used.”

Lyle said he could not confirm that the university’s administration would never request student data from PantherAI for an academic integrity case. He did, however, make it clear that he would not welcome them doing so.

“I would have significant concerns if our department was asked to use the system data to help justify an accusation of cheating,” Lyle said.

Lyle also said that he understands students’ potential fears about the system and that he plans on addressing those concerns to make students feel more comfortable using it.

“At no point was detection of cheating ever discussed as a reason for the deployment of PantherAI, and to my knowledge, no faculty member or external department has inquired about this capability to date,” he said.

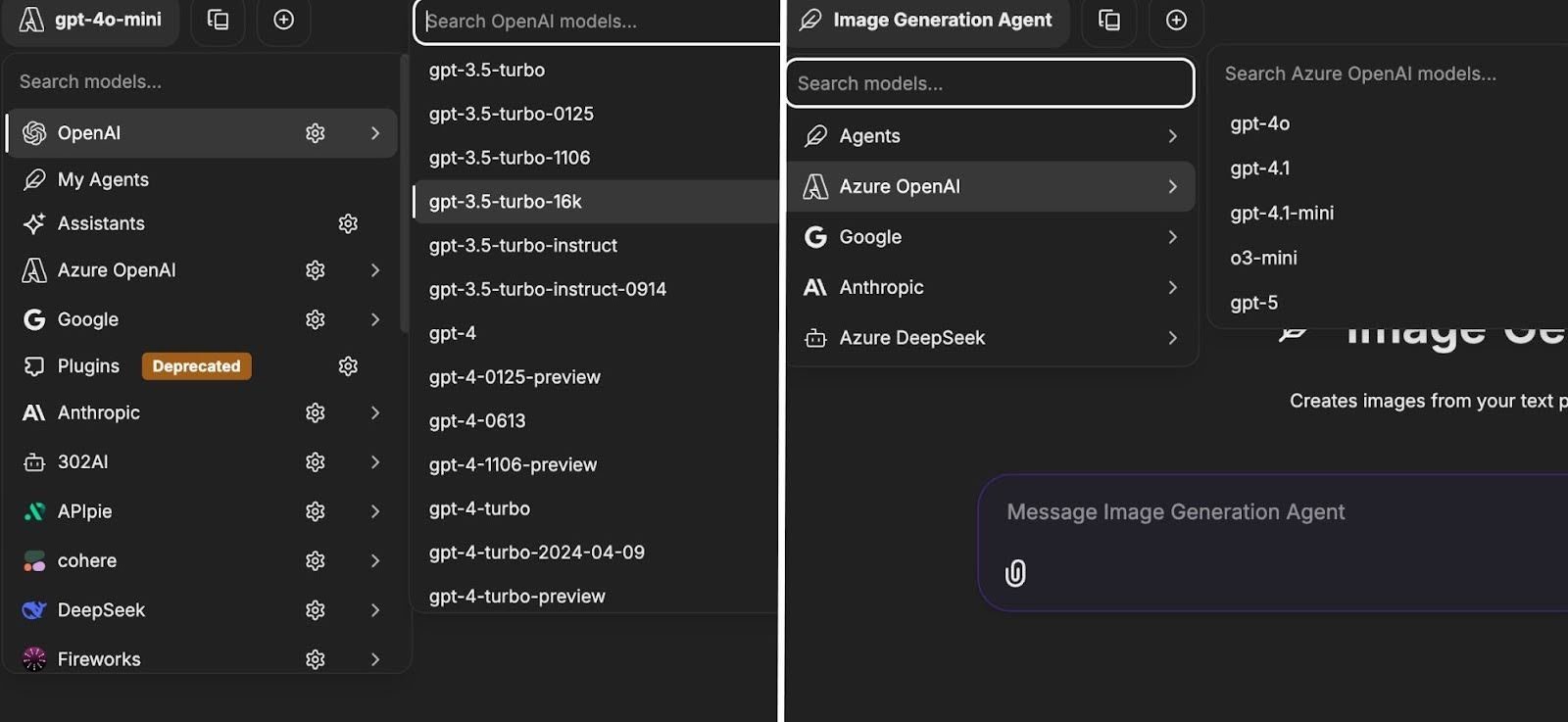

When looking at PantherAI and LibreChat, the systems have some small differences. Namely, LibreChat hosts more models and features than Chapman’s.

Pictured: LibreChat’s selection (left) versus PantherAI (right)

That raises the question of why a student should want to use PantherAI instead of going straight to the source. For Lyle, that all comes back to security.

“I feel it’s much safer for (students) to use PantherAI than going directly to an underlying provider,” he said.

Lyle also clarified that LibreChat requires users to subscribe to each service individually, and that Chapman has the ability to make more models available on its site for free.

Faculty at Chapman have been offered training and workshops about the system, but there was no official guidance given beyond readily available links on the internet. Multiple professors whom The Panther reached out to said they didn’t know enough about the system to answer questions.

Chapman is working on updating its guidelines around AI usage and may further clarify the terms and conditions for PantherAI.